Hi @SMonier,

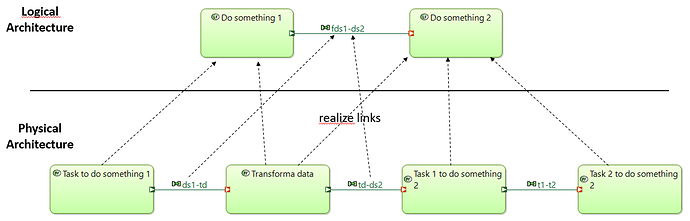

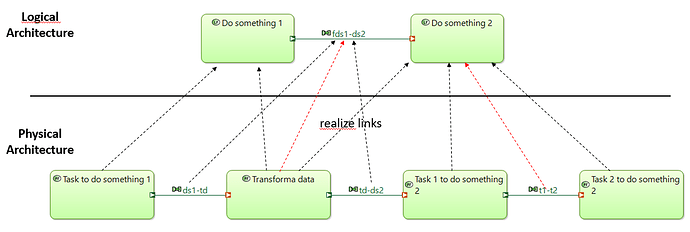

Thank you for sharing your workaround. That makes perfect sense for the Transform data function.

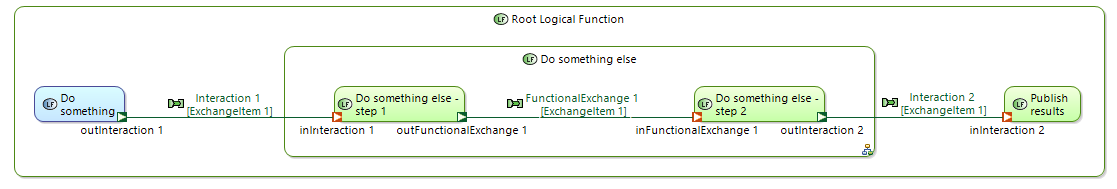

I’m dealing with functional decompositions like the one shown below:

In the transition from the SA to the LA level, Do something else is broken down into two separate functions. Both definitely belong to Do something else as nested leaf functions and do not belong to Publish results (unlike Transform data in your example, which you traced to two different functions at the LA level):

This gives me TJ_G_04, which is exactly what @cedpei described:

> FunctionalExchange 1 (Functional Exchange) is defined between Do something else - step 1 and Do something else - step 2, but there is no exchange defined between the corresponding source and target elements in the previous phase.

In fact, when I combine this with exchange items, the Capella validation pushes me in a direction I don’t want to go. As shown in the screenshot above, ExchangeItem 1 is passed through the chain of functions. Because of DCOM_13 (same exchange items on function ports and exchanges), I have to assign ExchangeItem 1 to the outFunctionalExchange 1 and inFunctionalExchange 1 ports. So far, that’s fine.

However, now I get TC_DF_11:

> inFunctionalExchange 1 (Function Port) on Do something else - step 2 (Function) shall realize inInteraction 1 (Function Port) on Do something else (Function)

> outFunctionalExchange 1 (Function Port) on Do something else - step 1 (Function) shall realize outInteraction 2 (Function Port) on Do something else (Function)

I’m not sure this makes sense, since the internal ports of the leaf functions do not necessarily realize the external ports of the parent function.

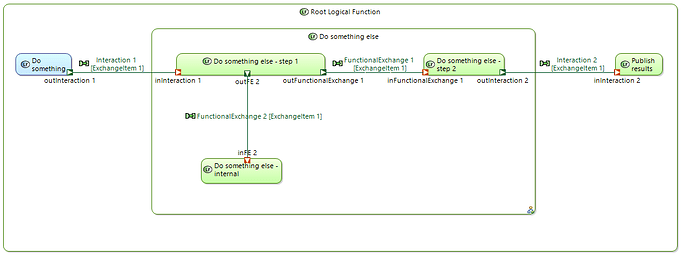

When I make the example a bit more complex by adding another purely internal function, Do something else - internal, so that the sub-functions can be allocated to different logical entities, I really disagree with what the validation tells me to do:

This gives me TC_DF_11:

> outFE 2 (Function Port) on Do something else - step 1 (Function) shall realize outInteraction 2 (Function Port) on Do something else (Function)

The port outFE 2 definitely does not realize the port outInteraction 2. Have you also seen this, and have you found a solution? I’d be fine with suppressing the validation check for this specific port if that were supported, but I’m not happy about disabling the entire *TC_DF_11* check globally because of a single, very specific port.

Does anyone have an idea how to deal with that?

Thank you,

Jürgen